The year of Linux on the desktop is whatever year you personally switched over.

Alex

FLOSS virtualization hacker, occasional brewer

- 4 Posts

- 57 Comments

3·3 months ago

mu4e inside my Emacs session.

2·3 months ago

I’ve generally been up front when starting new jobs that nothing impinges on my ability to work on FLOSS on my own time. Only one company put in a restriction on working on FLOSS in the same technical space as my $DAYJOB.

6·3 months ago

The article mentioned there is a long history of forks in the open source Doom world. It seems the majority of the active developers just moved to the new repository.

11·4 months ago

I helped with the initial Aarch64 emulation support for QEMU as well as working with others to make multi-threaded system emulation a thing. I maintain a number of subsystems but perhaps the biggest impact was implementing the cross compilation support that enabled the TCG testing to be run by anyone including eventually the CI system. This is greatly helped by being a paid gig for the last 12 years.

I’ve done a fair bit of other stuff over my many decades of using FLOSS including maintaining a couple of moderately popular Emacs packages. I’ve got drive-by patches in loads of projects as I like to fix things up as I go.

I didn’t know who Kirk was until the assassination. I have better things to do with my limited time than go on a deep dive into their history before posting any comment on the news. I kinda got the vibe when I realised that was who Cartman was based on in the recent South Park.

2·5 months ago

Was it before or after Oracle acquired Sun that the fork happened? I’m fairly sure it was Oracle that passed the project across to Apache and I have no idea why the Apache foundation accepted it.

101·5 months ago

Yeah, I don’t think this is an ncdu issue but something is broken with the OP’s system.

9·6 months ago

I’ve long avoided npm but attacks on PyPI are a worry.

1·6 months ago

I’ve got an Ampere workstation (AVA) which from a firmware point of view works fine. They may even fix the PCIe bus on later versions.

4·6 months ago

Asahi is a powerful example of what a small well motivated team can achieve. However they still face the Sisyphean task of reverse engineering entirely undocumented hardware and getting that upstream.

If you love Apple’s hardware then great. Personally when I have Apple hardware I just tweak the keys to make it a little more like a Linux system and use brew for the tools I’m used to. If I need to I can always spin up a much more hackable VM.

1·6 months ago

Arm has been slowly pushing standardisation for the firmware which solves a lot of the problems. On the server side we are pretty much there. For workstations I’m still waiting for someone to ship hardware with non-broken PCIe. On laptops the remaining challenges are power usage parity with Windows and the insistence of some manufacturers on trying to lock off EL2, which makes virtualization a pain.

Sorry to hear that. Good luck finding a new gig without needing to interact with Teams again.

I used to update my tickets from Emacs org-mode where I kept my working set of knowledge. The org export functions dealt with whatever format Jira expects. Nowadays I’m mostly tracking stuff so my comments are generally never more than a “thanks”, 👍 or occasionally a link to the patch series or pull requests.

Jira is alright, not great, not terrible. You need something to track projects and break down work and, at least, being ubiquitous means a lot of people are familiar with it.

Teams is a dumpster fire of excrement though.

What do the inputs and configuration drop down menus say?

I remember the old ADSL modems were effectively winmodems. I had to keep a Windows ME machine as my household router until the point the community had reverse engineered them enough to get them working on Linux.

At least they were USB-based rather than some random card. I think the whole driver could work in user space.

3·8 months ago

VirtIO was originally developed as a device para-virtualization interface as part of KVM but it is now an OASIS standard: https://docs.oasis-open.org/virtio/virtio/v1.3/virtio-v1.3.html which a number of hypervisors/VMMs support.

The line between what a hypervisor (like KVM) does and what is delegated to a Virtual Machine Monitor - VMM (like QEMU) is fairly blurry. There is always an additional cost to leaving the hypervisor to the VMM so it tends to be for configuration and lifetime management. However VirtIO is fairly well designed so the bulk of VirtIO data transactions can be processed by a dedicated thread which just gets nudged by the kernel when it needs to do stuff leaving the VM cores to just continue running.
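To make the "nudged thread" idea a bit more concrete, here is a toy sketch in Python. It is only a model and not how QEMU or KVM actually implement it: a `threading.Event` stands in for the kernel's notification, and the names `ToyVirtqueue`, `guest_submit`, `io_thread` and `run_demo` are made up for illustration.

```python
import queue
import threading


class ToyVirtqueue:
    """Toy stand-in for a virtqueue: shared buffers plus a 'kick' notification."""

    def __init__(self):
        self.buffers = queue.SimpleQueue()
        # Hypothetical stand-in for the kernel nudging the I/O thread.
        self.kick = threading.Event()


def guest_submit(vq, requests):
    """The 'guest' side: queue a batch of requests, then notify once."""
    for req in requests:
        vq.buffers.put(req)
    vq.kick.set()


def io_thread(vq, completed, stop):
    """Dedicated thread: sleeps until kicked, then drains the queue."""
    while True:
        vq.kick.wait()
        vq.kick.clear()
        # Read the stop flag before draining so nothing queued before
        # the stop request is left behind.
        stopping = stop.is_set()
        while True:
            try:
                req = vq.buffers.get_nowait()
            except queue.Empty:
                break
            completed.append(req.upper())  # pretend to service the request
        if stopping:
            return


def run_demo(requests):
    vq = ToyVirtqueue()
    completed = []
    stop = threading.Event()
    worker = threading.Thread(target=io_thread, args=(vq, completed, stop))
    worker.start()
    guest_submit(vq, requests)  # the caller carries on without waiting
    stop.set()
    vq.kick.set()               # final nudge so the worker notices the stop
    worker.join()
    return completed
```

The point of the sketch is that the submitting side never blocks on the device work: it queues buffers, kicks once, and keeps running while the dedicated thread does the processing.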

I should add HVF tends to delegate most things to the VMM rather than deal with things in the hypervisor. It makes for a simpler hypervisor interface although not quite as performance tuned as KVM can be for big servers.

2·8 months ago

No, the Apple hypervisor is called hvf, but projects like rust-vmm and QEMU can control and service guests run on that hypervisor. No KVM required.

I think the OP’s analysis might have made a bit of a jump from overall levels of hobbyist maintainers to what percentage of shipping code is maintained by people in their spare time.

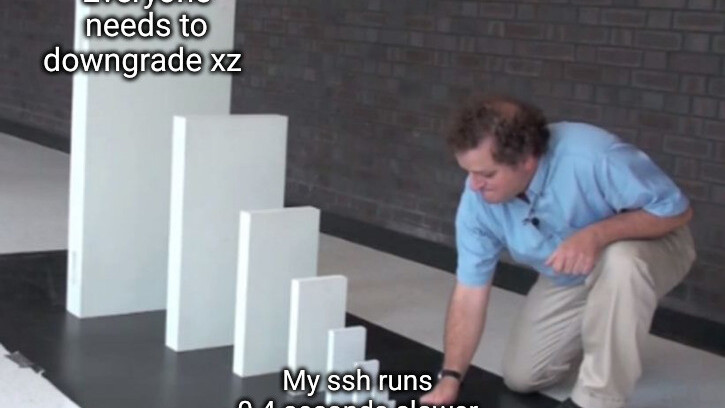

While the experiences of OpenSSL and xz should certainly drive us to find better ways of funding underlying infrastructure, you do see higher participation rates of paid maintainers where the returns are more obvious. The silicon vendors get involved in the kernel because it’s in their underlying interests to do so - and the kernel benefits as a result.

I maintain a couple of hobbyist packages in my spare time but it will never be a funded gig because comparatively fewer people use them compared to DAYJOB’s project which can make a difference to companies’ bottom lines.