☆ Yσɠƚԋσʂ ☆

- 544 Posts

- 513 Comments

2·6 days ago

I honestly kinda prefer older civ games because they were simpler and more focused. For me, Civ3 might really be the peak of the series.

it looks nearly identical to a cube I had at one of my jobs as well, I think even the phone is the same

11·9 days ago

The funniest thing for me is that humans end up doing the exact same thing. This is why it’s so notoriously difficult to create organizational policies that actually produce desired results. What happens in practice is that people find ways to comply with the letter of the policy that require the least energy expenditure on their part.

I can recommend PhotoGIMP which makes GIMP UI fairly close to Ps.

3·18 days ago

It would be nice if they made their stuff more open source friendly, like publishing specs alone would go a long way.

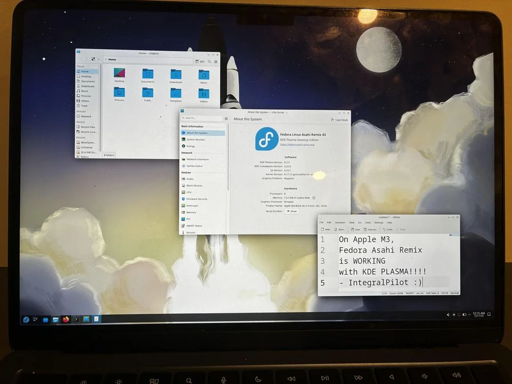

18·20 days ago

Yeah, Linux makes Macs a lot more appealing.

3·28 days ago

You have to download the repo and you need Python installed. In the project folder you’d run

    python -m venv venv
    source venv/bin/activate  # On Windows: venv\Scripts\activate
    pip install -r requirements.txt

and then you should be able to run

    python create_map_poster.py --city <city> --country <country> [options]
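As a rough sketch of what that last step looks like with real values, here is a small Python helper that only builds the command line. The city and country are made-up example values, `build_poster_command` is a hypothetical helper name (not part of the project), and it sticks to the `--city` and `--country` flags mentioned above, since the other options aren't listed here.

```python
import shlex
import sys


def build_poster_command(city, country, script="create_map_poster.py"):
    """Return the argv list for the poster script (hypothetical helper)."""
    # sys.executable is the interpreter currently running, so inside the
    # activated venv it sees the packages installed from requirements.txt.
    return [sys.executable, script, "--city", city, "--country", country]


if __name__ == "__main__":
    # Example values only; a multi-word name stays a single argument.
    cmd = build_poster_command("New York", "USA")
    # shlex.join quotes the arguments so the line can be pasted into a shell.
    print(shlex.join(cmd))
    # To actually run it from the project folder:
    # import subprocess; subprocess.run(cmd, check=True)
```

The main thing to watch for is quoting: a city like New York has to be passed as one argument, which is why the sketch keeps the arguments in a list instead of gluing a string together.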

1·30 days ago

Not necessarily, the models can often be tricked into spilling the beans of how they were trained.

3·30 days ago

Exactly, open models are basically unlocking knowledge for everyone that’s been gated by copyright holders, and that’s a good thing.

1·30 days ago

You can demand it but it’s not a pragmatic demand as you claim. Open weight models aren’t equivalent to free software, they are much closer to proprietary gratis software. Usually you don’t even get access to the training software and the training data, and even if you did it would take millions in capital to reproduce them.

This is a problem that can be solved by creating open source community tools. The really difficult and expensive part is doing the initial training.

You can put into your license whatever you want but for it to be enforceable it needs to grant the licensee additional rights they don’t already have without the license. The theory under which tech companies appear to be operating is that they don’t in fact need your permission to include your code in their datasets.

There have been numerous copyleft cases where companies were forced to release the source. There’s already existing legal precedent here.

3·30 days ago

The actual problem is the capitalist system of relations. If it’s not AI, then it’s bitcoin mining, NFTs, or what have you. The AI itself is just a technology, and if it didn’t exist, capitalism would find something else to shove down your throat.

21·30 days ago

here are just a few

- https://interestingengineering.com/innovation/ai-predicts-nuclear-fusion-plasma-failure

- https://www.scmp.com/news/china/science/article/3332736/central-china-ai-telling-humans-how-build-high-speed-rail-tunnel

- https://www.xinhuanet.com/english/2020-02/18/c_138795661.htm

- https://www.newscientist.com/article/2511954-amateur-mathematicians-solve-long-standing-maths-problems-with-ai/

- https://mathstodon.xyz/@tao/115855840223258103

- https://www.caltech.edu/about/news/ai-program-plays-the-long-game-to-solve-decades-old-math-problems

11·30 days ago

This is the correct take. This tech isn’t going away, no matter how much whinging people do, the only question is who is going to control it going forward.

Hopefully this stuff pans out. I’d love to see it happen.

This is existing high performance hardware that you can buy. I’d love for there to be something equivalent built using RISCV, but there’s not.

I haven’t actually tried that. I got it running on my M1, but only used it with the laptop screen.

My view is that all corps are slimy, some are just more blatant about it than others. I do agree that Apple stuff tends to be overpriced, and I’d love to see somebody else offer a similar architecture using RISCV that would target Linux. I’m kind of hoping some Chinese vendors will start doing that at some point. What Apple did with their architecture is pretty clever, but it’s not magic, and now that we know how and why it works, it seems like it would make sense for somebody else to do something similar.

The big roadblock in the west is the fact that Windows has a huge market share, and the market for Linux users is just too small for a hardware vendor to target without having Windows support. But in China, there’s an active push to get off the US tech stack, and that means Windows doesn’t have the same relevance there.

Exactly, and there is already some work happening in that regard. This project is focusing on making a high performance RISCV architecture https://github.com/OpenXiangShan/XiangShan

I really hope the project doesn’t die, they had some people leave recently and there was some drama over that. Apple hardware is really nice, and with Linux it would be strictly superior to macOS, which is just bloated garbage at this point. I’m also hoping we’ll see somebody else make a similar architecture to the M series using ARM or RISCV targeting Linux. Maybe we’ll see some Chinese vendors go the RISCV route in the future.

Not that I’ve seen.